from operator import invert

from fastcore.basics import *

from fastai.vision.all import *

from fastai.torch_basics import *

from torch._C import dtype

import libs.images2chips

import sys

import os

from skimage import io

from glob import glob

from tqdm import tqdm_notebook as tqdm

from sklearn.metrics import confusion_matrix

import random

import itertools

# Matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

## basics

set_seed(105)

train_a_path = Path('/home/ubuntu/data/dronedeploy/dataset-medium/image')

label_a_path = Path('/home/ubuntu/data/dronedeploy/dataset-medium/labels')

elev_path = Path('/home/ubuntu/data/dronedeploy/dataset-medium/elevations')

imgNames = get_image_files(train_a_path)

lblNames = get_image_files(label_a_path)

eleNames = get_image_files(elev_path)

eleFileNameA = eleNames[0]

eleFile = PILMask.create(eleFileNameA)

# eleFile.show(cmap='tab20')

np.unique(eleFile)/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (166788306 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16,

17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33,

34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50,

51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67,

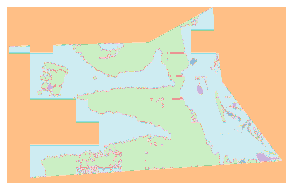

68, 69, 70, 71, 72], dtype=uint8)lblFileNameA = lblNames[random.randint(1, 19)]

lblFile = PILMask.create(lblFileNameA)

lblFile.show(cmap='tab20')

np.unique(lblFile)

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (97318535 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

array([ 81,  99, 105, 132, 155, 255], dtype=uint8)
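The `DecompressionBombWarning` messages in the outputs come from Pillow, which warns whenever an image has more pixels than `Image.MAX_IMAGE_PIXELS` (89478485 here, as the message shows). For trusted local orthomosaics, a minimal sketch of raising that limit might look like the following. The value `200_000_000` is an assumption, chosen only because it exceeds the largest size reported in the warnings; the original notebook does not set it.

```python
import warnings
from PIL import Image

# Assumption: the files are trusted local data, so a higher limit is acceptable.
# 200_000_000 is an illustrative value above the largest size seen in the warnings.
Image.MAX_IMAGE_PIXELS = 200_000_000

# Optionally silence any remaining warnings of this type.
warnings.simplefilter("ignore", Image.DecompressionBombWarning)
```

This should run before the first `PILMask.create` or `PILImage.create` call so the new limit applies to every image that gets opened.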

def imageChipGet(dataset):
    image_chips = f'{dataset}/image-chips'
    label_chips = f'{dataset}/label-chips'
    if not os.path.exists(image_chips) and not os.path.exists(label_chips):
        print("creating chips")
        libs.images2chips.run(dataset)
    else:
        print(
            f'chip folders "{image_chips}" and "{label_chips}" already exist, remove them to recreate chips.')

# imageChipGet('dataset-medium')

## Gray or RGB

paletteISPRS = {0: (255, 255, 255), # Impervious surfaces (white)

1: (0, 0, 255), # Buildings (blue)

2: (0, 255, 255), # Low vegetation (cyan)

3: (0, 255, 0), # Trees (green)

4: (255, 255, 0), # Cars (yellow)

5: (255, 0, 0), # Clutter (red)

6: (0, 0, 0)} # Undefined (black)

paletteDDSG = {

0: (230, 25, 75), # BUILDING

1: (145, 30, 180), # CLUTTER

2: (60, 180, 75), # VEGETATION

3: (245, 130, 48), # WATER

4: (255, 255, 255), # GROUND

5: (000, 130, 200), # CAR

6: (255, 000, 255), # IGNORE

7: (0, 0, 0) # Undefined (black)

}

paletteDDSG2 = {

0: (75, 25, 230), # BUILDING

1: (180, 30, 145), # CLUTTER

2: (75, 180, 60), # VEGETATION

3: (48, 130, 245), # WATER

4: (255, 255, 255), # GROUND

5: (200, 130, 0), # CAR

6: (255, 000, 255), # IGNORE

7: (0, 0, 0) # Undefined (black)

}

# convert to gray scale labels

def getGrayScaleValue(palette):
    result = {}
    for i, o in (palette).items():
        R = o[0]
        G = o[1]
        B = o[2]
        y = R * 299 / 1000 + G * 587 / 1000 + B * 114 / 1000
        result[i] = round(y)
        # result.append(int(y))
    return result
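The gray-based conversion only works if every class color maps to a distinct gray value; two colors with the same rounded luma would be indistinguishable in the mask. Here is a small sketch of a sanity check for that, using the same 0.299/0.587/0.114 weights. The helper names `luma` and `find_gray_collisions` are hypothetical and not part of the original notebook.

```python
from collections import defaultdict

def luma(rgb):
    """Rounded gray value, using the same weights as getGrayScaleValue."""
    r, g, b = rgb
    return round(r * 299 / 1000 + g * 587 / 1000 + b * 114 / 1000)

def find_gray_collisions(palette):
    """Return {gray_value: [class ids]} for gray values shared by several classes."""
    by_gray = defaultdict(list)
    for cls, rgb in palette.items():
        by_gray[luma(rgb)].append(cls)
    return {gray: ids for gray, ids in by_gray.items() if len(ids) > 1}

# Copy of paletteDDSG so the snippet runs on its own.
palette_ddsg = {
    0: (230, 25, 75), 1: (145, 30, 180), 2: (60, 180, 75), 3: (245, 130, 48),
    4: (255, 255, 255), 5: (0, 130, 200), 6: (255, 0, 255), 7: (0, 0, 0),
}

collisions = find_gray_collisions(palette_ddsg)
print(collisions)  # an empty dict means every class has its own gray value
```

Running the same check on any new palette before using `converFromGray` would flag ambiguous classes early.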

getGrayScaleValue(paletteDDSG)

{0: 92, 1: 81, 2: 132, 3: 155, 4: 255, 5: 99, 6: 105, 7: 0}

# Convert from grayscale values

def converFromGray(lblname, palette):
    # Load the label as a single-channel mask rather than as RGB
    label = PILMask.create(lblname)
    labelArray = np.array(label)
    paletteGray = getGrayScaleValue(palette)
    # Write into a fresh all-zero array instead of modifying labelArray in place.
    # Avoid labelArray[labelArray == o] = i: because of (0, 0, 0), it would be
    # unclear whether a 0 came from the mapping or was already in the original image.
    arr_2d = np.zeros(
        (labelArray.shape[0], labelArray.shape[1]), dtype=np.uint8)
    for i, o in paletteGray.items():
        arr_2d[labelArray == o] = i
    # print(np.unique(labelArray))
    return PILMask.create(arr_2d)
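Because `arr_2d` starts as all zeros, any gray value that is not in the palette ends up as class 0 and cannot be told apart from a genuine class-0 pixel. One possible alternative, shown here only as a sketch, is a 256-entry lookup table pre-filled with a sentinel. The function name `gray_to_class` and the sentinel value 255 are assumptions for illustration, and the approach presumes a 2-D `uint8` mask.

```python
import numpy as np

UNKNOWN = 255  # hypothetical sentinel for gray values missing from the palette

def gray_to_class(label_array, palette_gray, unknown=UNKNOWN):
    """Map a uint8 gray mask to class ids through a 256-entry lookup table."""
    lut = np.full(256, unknown, dtype=np.uint8)
    for cls, gray in palette_gray.items():
        lut[gray] = cls
    # Indexing the table with the mask remaps every pixel in one pass.
    return lut[label_array]

# Gray values as returned by getGrayScaleValue(paletteDDSG)
palette_gray = {0: 92, 1: 81, 2: 132, 3: 155, 4: 255, 5: 99, 6: 105, 7: 0}

demo = np.array([[92, 81, 7], [255, 0, 105]], dtype=np.uint8)
result = gray_to_class(demo, palette_gray)
print(result)
```

In the demo, the gray value 7 is not in the palette, so it stays marked as `UNKNOWN` instead of silently becoming class 0.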

temp = converFromGray(lblNames[3], paletteDDSG)

np.unique(temp)

array([0, 1, 2, 3, 4, 5, 6], dtype=uint8)

# There is a second way to write this conversion:

# https://github.com/damminhtien/deepnet-for-semantic-labeling-photogrammetry/blob/master/Insight-data-potsdam.ipynb

# Convert from RGB values

# First build the inverted color palette

def getInverPalette(palette):
    inverted = {}
    for k, v in palette.items():
        inverted[v] = k
    return inverted

# getInverPalette(paletteDDSG)

def converFromRGB(lblname, palette):
    # Load the label as an RGB image (PILImage), not as a single-channel mask
    label = PILImage.create(lblname)
    labelArray = np.array(label)
    invertP = getInverPalette(palette)
    print(np.unique(labelArray))
    arr_2d = np.zeros(
        (labelArray.shape[0], labelArray.shape[1]), dtype=np.uint8)
    for i, o in invertP.items():
        # axis=2 makes the comparison run across the three RGB values
        # reshape turns the color from shape (3,) into shape (1, 1, 3)
        arr_2d[np.all(labelArray == np.array(i).reshape(1, 1, 3), axis=2)] = o
    return PILMask.create(arr_2d)
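The key step in `converFromRGB` is the broadcasted comparison: an `(H, W, 3)` image compared with a `(1, 1, 3)` color gives a per-channel boolean array, and `np.all(..., axis=2)` reduces it to one boolean per pixel. A tiny self-contained sketch on a synthetic 2x2 image follows; the array `rgb` and the three-color subset of `paletteDDSG` are made up for illustration.

```python
import numpy as np

# Subset of paletteDDSG, enough for the demo
palette = {0: (230, 25, 75), 2: (60, 180, 75), 4: (255, 255, 255)}

# Synthetic 2x2 RGB label image
rgb = np.array([[[230, 25, 75], [255, 255, 255]],
                [[60, 180, 75], [230, 25, 75]]], dtype=np.uint8)

class_map = np.zeros(rgb.shape[:2], dtype=np.uint8)
for cls, color in palette.items():
    # (H, W, 3) == (1, 1, 3) broadcasts per pixel; np.all over axis 2
    # is True only where all three channels match the class color.
    match = np.all(rgb == np.array(color).reshape(1, 1, 3), axis=2)
    class_map[match] = cls

print(class_map)
```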

temp = converFromRGB(lblNames[2], paletteDDSG)

np.unique(temp)

[ 0 25 30 48 60 75 130 145 180 200 230 245 255]

array([0, 1, 2, 3, 4, 5, 6], dtype=uint8)

## analysis of class components

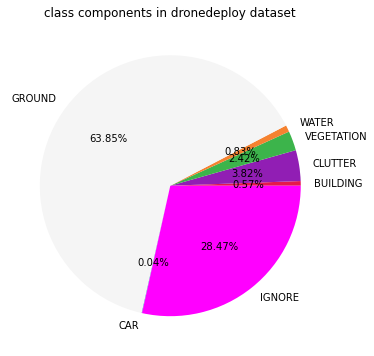

# Start drawing the pie charts

labels = ['BUILDING', 'CLUTTER', 'VEGETATION',

'WATER', 'GROUND', 'CAR', 'IGNORE']

colrDDSG = ['#e6194b', '#911eb4', '#3cb44b',

'#f58230', 'whitesmoke', '#0082c8', '#ff00ff']

colrISPRS = ['whitesmoke', '#0000ff', '#00FFFF',

'#00FF00', '#FFFF00', '#FF0000']

# Build a pie-chart function

def plotPieChart(lblname, palette, title='dataset', draw=True):
    # n_pixel holds each class's share of the pixels;
    # pixelCount holds the raw per-class pixel counts
    pixelCount = []
    if palette == paletteDDSG:
        n_pixel = [0, 0, 0, 0, 0, 0, 0]
        n_all_pixel = [0, 0, 0, 0, 0, 0, 0]
        colors = colrDDSG
        label = ['BUILDING', 'CLUTTER', 'VEGETATION',
                 'WATER', 'GROUND', 'CAR', 'IGNORE']
    else:
        n_pixel = [0, 0, 0, 0, 0, 0]
        n_all_pixel = [0, 0, 0, 0, 0, 0]
        label = ['Impervious Surface', 'Buildings', 'VEGETATION',
                 'Tree', 'Cars', 'Clutter']
        colors = colrISPRS
    _temp = converFromGray(lblname=lblname, palette=palette)
    # np.unique(_temp)
    imageArray = image2tensor(_temp).squeeze(0)
    num, counts = np.unique(imageArray, return_counts=True)
    for i in range(len(num)):
        n_pixel[num[i]] = counts[i]
    pixelCount = n_pixel
    n_pixel = np.round(n_pixel/np.sum(n_pixel), 8)
    if palette == paletteDDSG:
        colors = colrDDSG
    else:
        colors = colrISPRS
    if draw is True:
        fig, ax = plt.subplots(figsize=(6, 6))
        ax.pie(n_pixel.tolist(), labels=label,
               autopct='%1.2f%%', colors=colors)
        ax.set_title(f"class components in {title} dataset")
    return n_pixel, pixelCount

aa, _ = plotPieChart(lblNames[random.randint(1, 20)],

paletteDDSG, 'dronedeploy')

# Get the statistics over all labels

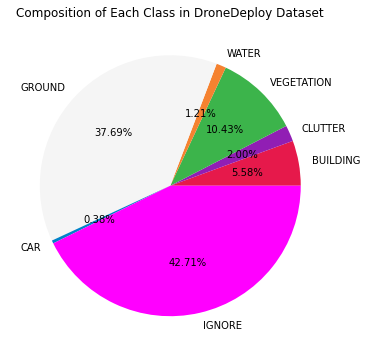

def get_all_piestatics(palette, lblNames, title='dataset'):
    if palette == paletteDDSG:
        n_pixel = [0, 0, 0, 0, 0, 0, 0]
        n_all_pixel = [0, 0, 0, 0, 0, 0, 0]
        pixelAllCount = [0, 0, 0, 0, 0, 0, 0]
        colors = colrDDSG
        label = ['BUILDING', 'CLUTTER', 'VEGETATION',
                 'WATER', 'GROUND', 'CAR', 'IGNORE']
    else:
        n_pixel = [0, 0, 0, 0, 0, 0]
        n_all_pixel = [0, 0, 0, 0, 0, 0]
        pixelAllCount = [0, 0, 0, 0, 0, 0]
        label = ['Impervious Surface', 'Buildings', 'VEGETATION',
                 'Tree', 'Cars', 'Clutter']
        colors = colrISPRS
    for i in lblNames:
        n_pixel, pixelCount = plotPieChart(i, palette, draw=False)
        for j in range(len(n_pixel)):
            n_all_pixel[j] += n_pixel[j]
            pixelAllCount[j] += pixelCount[j]
    n_all_pixel = np.round(n_all_pixel/np.sum(n_all_pixel), 8)
    if palette == paletteDDSG:
        colors = colrDDSG
    else:
        colors = colrISPRS
    fig, ax = plt.subplots(figsize=(6, 6))
    ax.pie(n_all_pixel.tolist(), labels=label,
           autopct='%1.2f%%', colors=colors)
    ax.set_title(f"Composition of Each Class in {title} Dataset")
    return n_all_pixel, pixelAllCount

cc, dd = get_all_piestatics(paletteDDSG, lblNames, 'DroneDeploy')

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (125653964 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (166788306 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (97318535 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (106335837 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (136621384 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (122397080 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (161252550 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

/home/ubuntu/miniconda3/envs/new/lib/python3.8/site-packages/PIL/Image.py:2847: DecompressionBombWarning: Image size (91261544 pixels) exceeds limit of 89478485 pixels, could be decompression bomb DOS attack.

warnings.warn(

# get the statistics for the Potsdam dataset

data_path = Path('/home/ubuntu/.fastai/data/isprs/')

path_img = data_path / 'Potsdam/2_Ortho_RGB/train_pick'

path_lbl = data_path / 'Potsdam/5_Labels_for_participants'

imgNames = get_image_files(path_img)

lbl_names = get_image_files(path_lbl)

aa, bb = get_all_piestatics(paletteISPRS, lbl_names, 'Potsdam')

aa = array([0.28464172, 0.26721742, 0.23536882, 0.14624186, 0.01689545, 0.04963473])

bb = [245930445, 230875852, 203358663, 126352970, 14597667, 42884403]

cc = array([0.05577413, 0.01997206, 0.10434894, 0.01207262, 0.37689098, 0.00380131, 0.42713996])

dd = [134082091, 30949408, 277077545, 35463163, 860128086, 8950954, 1088831663]

ee = array([0.27606349, 0.26086128, 0.21335261, 0.231575, 0.01192941, 0.00621821])

ff = [21815349, 20417332, 16272917, 18110438, 945687, 526083]
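`get_all_piestatics` adds up the per-image fractions returned by `plotPieChart` and then renormalizes, so `n_all_pixel` is effectively an unweighted mean over images: a small image counts as much as a large one. When all images have the same size the two views coincide, but for images of varying size a pixel-weighted share can be derived from the raw counts instead. A minimal sketch using the recorded `dd` counts for DroneDeploy; the names `total` and `weighted` are illustrative:

```python
# Raw per-class pixel counts recorded for DroneDeploy (dd)
dd = [134082091, 30949408, 277077545, 35463163, 860128086, 8950954, 1088831663]
labels = ['BUILDING', 'CLUTTER', 'VEGETATION',
          'WATER', 'GROUND', 'CAR', 'IGNORE']

total = sum(dd)
# Pixel-weighted share: each class's pixels divided by all pixels in the dataset
weighted = [count / total for count in dd]

for name, frac in zip(labels, weighted):
    print(f"{name:<10} {frac:.4%}")
```

Comparing `weighted` with `cc` shows how much the two definitions differ for this dataset.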

# get the statistics for the Vaihingen dataset

data_path = Path('/home/ubuntu/.fastai/data/isprs/')

path_img = data_path / 'Vaihingen/images'

path_lbl = data_path / 'Vaihingen/label'

imgNames = get_image_files(path_img)

lbl_names = get_image_files(path_lbl)

# ee,ff = get_all_piestatics(paletteISPRS, lbl_names, 'Vaihingen')